In this article, I am going to walk through some of the work on music information retrieval that I have been doing over the past few weeks, applied to a specific game: Beat Saber.

What is Beat Saber?

Beat Saber is a virtual reality (VR) rhythm game released in 2018 by Beat Games, which, by the way, was recently bought by Facebook. The principle of the game is simple: in your VR headset, you are immersed in a 3D world where you have to destroy blocks and avoid obstacles in time with the song being played. I invite you to watch the latest video released on their YouTube channel to understand the logic behind the game.

For me, this is THE game that shows how cool VR is, and it's a must-try for anyone curious about VR (the game is available on most of the headsets on the market).

The game ships with a list of tracks made by Beat Games, and they are cool, but on PC there is a community building its own tracks for the game (user-generated content, UGC) with the songs of their choice. The main place where these users post their content is bsaber.com.

The website seems to be tolerated by Beat Games, and I think it is helping to make the game popular.

A few weeks ago, I decided to build a scraper for the songs on this website (the same kind of work as in my previous article; I am still not the owner of the data, so I am not sharing it) for several reasons:

- This place is a marketplace where users propose and rate content, so it is a perfect place to build a dataset for a recommendation engine

- When I looked at the massive catalogue of the website, most of my favourite songs were not there, so why not use the songs currently available to build an ML system that could generate levels for the song of your choice?

On this last point, when I presented my idea to some of my friends, they pointed me to the work of OXAI and their DeepSaber project, and yes, it's exactly that (what they have achieved is incredible). I am planning to use their work to help me build my own system in the future (but I still need to ramp up on deep learning).

My first idea for working on the recommender and the generator was to analyze the tracks and songs produced by the community directly. Let's start with the songs.

Description of the situation

One of the most popular Python packages for music analysis is libROSA, and I invite you to watch the talk given by Brian McFee on the package.

For this article, I am using version 0.7 of the package.

To illustrate the concepts around music analysis, my primary references are:

- The definitions and diagrams found in the book Fundamentals of Music Processing by Meinard Müller, which seems to be the reference in the domain of music processing

- The YouTube channel 3Blue1Brown, which does a fantastic job of explaining scientific concepts (God, I wish I had had this kind of resource when I was an engineering student)

- This excellent repository made by Steve Tjoa, an engineer at Google, which I highly recommend; it mixes libROSA, Müller's book and other resources around music information retrieval.

I decided to choose two songs to be my guinea pigs:

- One of the coolest electro songs released during the last decade, Midnight City by M83

- The other one, and you are going to hate me for it, is Toss a Coin to Your Witcher by Sonya Belousova & Giona Ostinelli (I know the song was out of your head, but now it's back)

These two songs belong to different genres, and I think they are a good starting point because their user reviews are good on bsaber.com. Let's start the analysis of the songs.

Music information retrieval

The song used in the background of a Beat Saber level can be seen (like every song) as an audio signal. Let's go through some concepts.

Amplitude

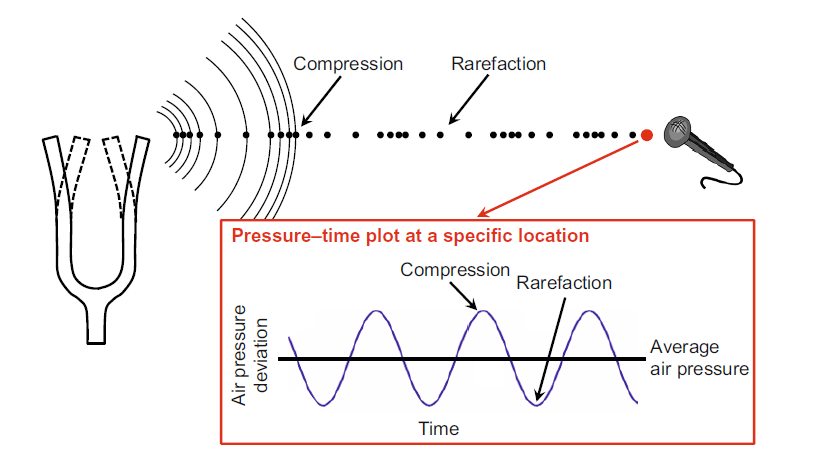

Sound is generated by a vibrating object that causes the displacement and oscillation of air molecules, which produces local regions of compression and rarefaction. In Müller's book, there is a clear illustration of this phenomenon.

Recording a sound amounts to capturing the air pressure oscillations at regular instants (the number of captures per second is called the sampling rate, sr). In libROSA, you can build this kind of diagram with the following code:

import librosa

# To load the file, and get the amplitude measured with the sampling rate

amplitude, sr = librosa.load(path_song)

# To plot the pressure-time plot

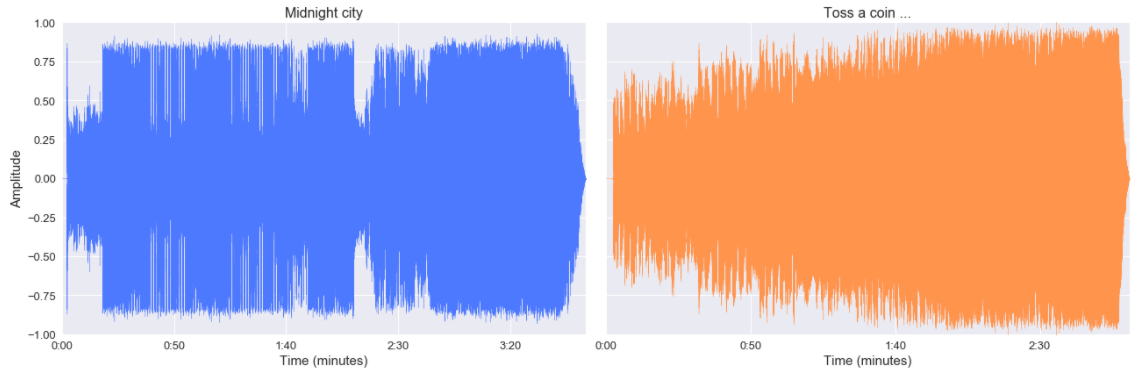

librosa.display.waveplot(amplitude, sr=sr) And for our two songs, there is their pressure-time plot.

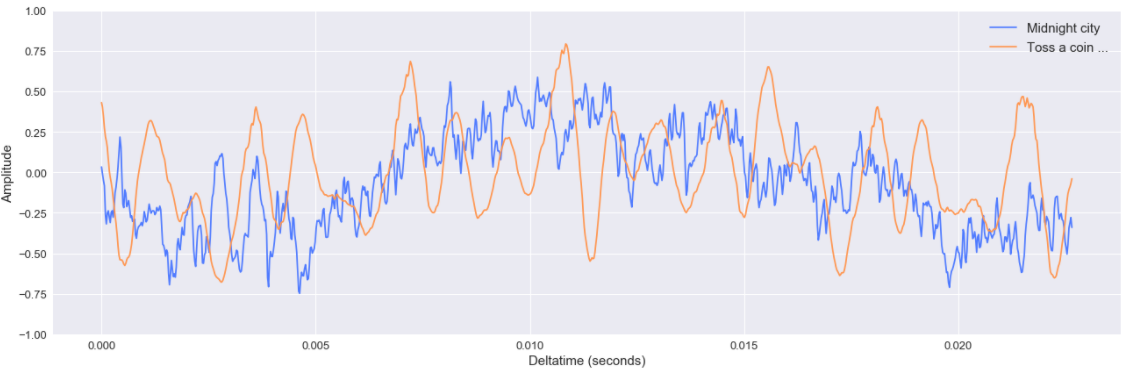

As we can see, the two songs have different waveforms. The M83 song seems to have more phases than the one from The Witcher (with “calm” periods at the beginning and around two minutes). This visualization offers a good overview, but let’s zoom in (on the middle of the songs).

As we can see, Midnight City looks more complex (noisier?) in terms of amplitude oscillation than the song from The Witcher (but keep in mind that this only covers a specific portion of each song).
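To make the zoom concrete, here is a minimal sketch of how a short window around the middle of a song can be cut out of the samples. The helper name `middle_window` and the 50 ms window length are my own assumptions for illustration, and a synthetic tone stands in for a real song so that the snippet runs without any audio file.

```python
import numpy as np


def middle_window(amplitude, sr, window_s=0.05):
    """Return the timestamps and samples of a short window centred on the middle of the song.

    Hypothetical helper: the name and the default window length are illustrative only.
    """
    n_window = int(window_s * sr)                        # number of samples in the window
    start = max(len(amplitude) // 2 - n_window // 2, 0)  # first sample of the window
    stop = min(start + n_window, len(amplitude))         # one past the last sample
    times = np.arange(start, stop) / sr                  # sample index -> seconds
    return times, amplitude[start:stop]


# Synthetic stand-in for a song: 10 s of a 440 Hz tone at 22050 Hz (librosa's default rate)
sr = 22050
amplitude = np.sin(2 * np.pi * 440 * np.arange(10 * sr) / sr)

times, zoom = middle_window(amplitude, sr)
```

With a real song, `amplitude` and `sr` would simply be the values returned by `librosa.load`, and `times` and `zoom` can be passed to any plotting function.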

So now we have something more tangible to analyze alongside a Beat Saber level, but one crucial fact is that these signals are quite complex, and it could be useful to make them simpler, so let’s call my friend Fourier.

Fourier transform

The principle behind the Fourier transform is to decompose a complex signal into a combination of multiple simpler signals. I don’t want to go through all the theory behind the process (school nightmares), but I invite you to watch an excellent resource made by 3Blue1Brown on the subject (thanks to my colleagues in the UK for the link); it’s 20 minutes long but worth it.
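As a toy illustration of this decomposition (not the libROSA pipeline used in this article), the sketch below builds a signal from two sine waves and uses NumPy's FFT to find them again. The sampling rate, frequencies and amplitudes are arbitrary values chosen for the example.

```python
import numpy as np

sr = 1000                  # samples per second (arbitrary for this toy example)
t = np.arange(sr) / sr     # one second of timestamps

# A "complex" signal made of two simple ones: a strong 50 Hz sine and a weaker 120 Hz sine
signal = 1.0 * np.sin(2 * np.pi * 50 * t) + 0.5 * np.sin(2 * np.pi * 120 * t)

spectrum = np.fft.rfft(signal)                    # Fourier transform of a real-valued signal
freqs = np.fft.rfftfreq(len(signal), d=1 / sr)    # frequency in Hz of each output bin
magnitude = 2 * np.abs(spectrum) / len(signal)    # rescale so that a unit sine peaks at 1

# The two largest peaks give back the hidden components and their intensity
top = np.argsort(magnitude)[-2:][::-1]
for i in top:
    print(f"{freqs[i]:.0f} Hz with amplitude {magnitude[i]:.2f}")
```

A real song is of course made of far more than two components, and they change over time, which is why the transform is usually applied on short successive windows of the signal.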

The idea of the transform is to detect the simple signals hidden in the song and estimate their intensity. To apply this operation with libROSA, you can use this piece of code.

###

# Build the Fourier trasnform

X = librosa.stft(amplitude)

# Apply a conversion from raw amplitude to decibel

Xdb = librosa.amplitude_to_db(abs(X))

# Build a spectrogram

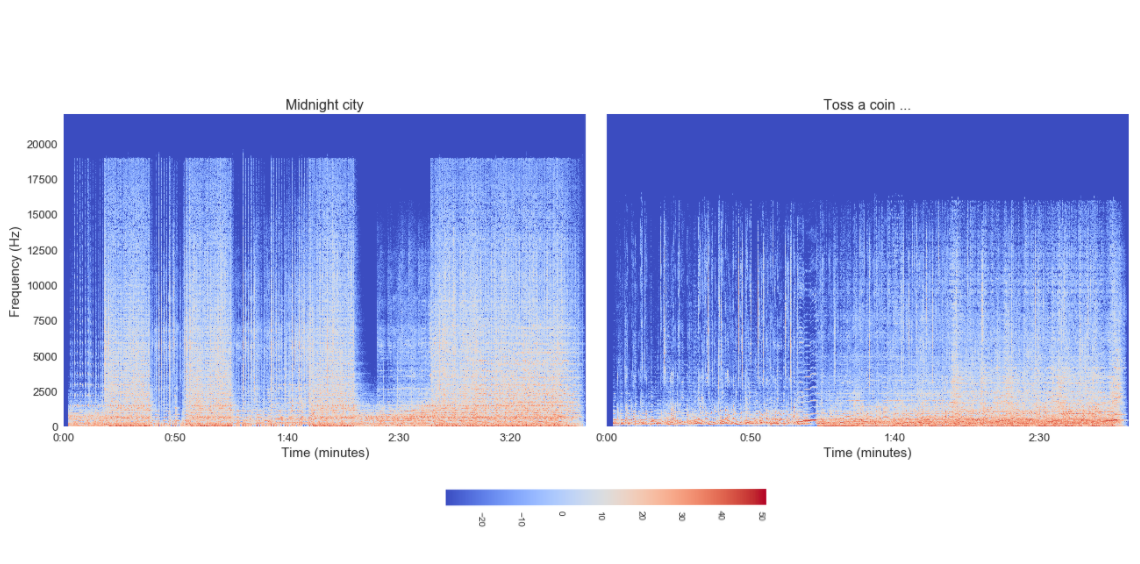

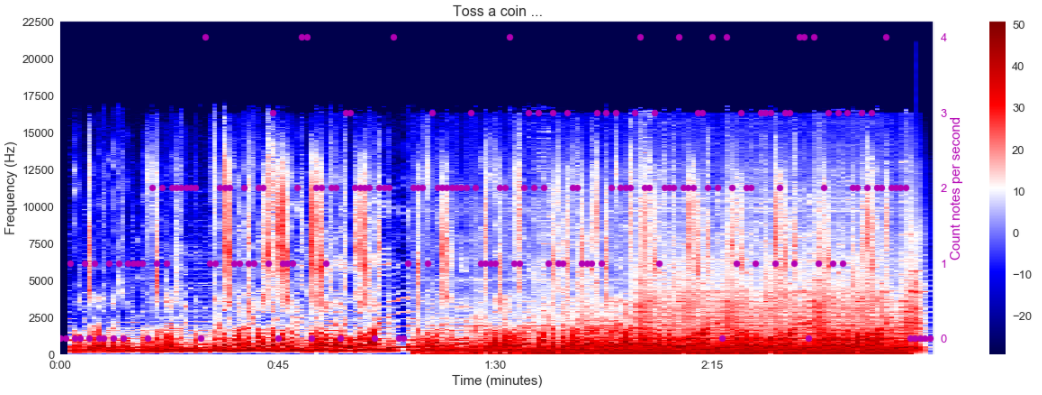

librosa.display.specshow(Xdb, sr=sr, x_axis="time", y_axis="hz")This code provides us with a graph called spectrogram that is a representation of the time versus a frequency with a colour scale related to the power of the signal (defined by the frequency). There is the spectrogram for our two songs.

As we can see, the two songs have very different spectrograms:

- Midnight City seems to be composed of signals with higher frequencies than the song from The Witcher

- The Witcher looks noisier than Midnight City; I don’t know how to explain what I am seeing

This kind of silhouette is also present in The Witcher, but not as marked as this one. Still, even once decomposed, the signal remains complicated to analyze (because of its complexity). Why not split it into accompaniment and vocals?

Vocals and accompaniment splitting

To do that, I could have used the ready-made scripts that can be found in the libROSA package, but my research when I started this project led me to a tech blog article from Deezer, which open-sourced a tool called spleeter.

Deezer released this tool at the end of 2019, built on TensorFlow v1. I didn’t find the paper or the presentation made during ISMIR 2019 (a big conference on music information retrieval). If you want to hear the results of the separation (and they are good) on Midnight City and Toss a Coin, you can go to this link and download the separated files in the spleeter folder.

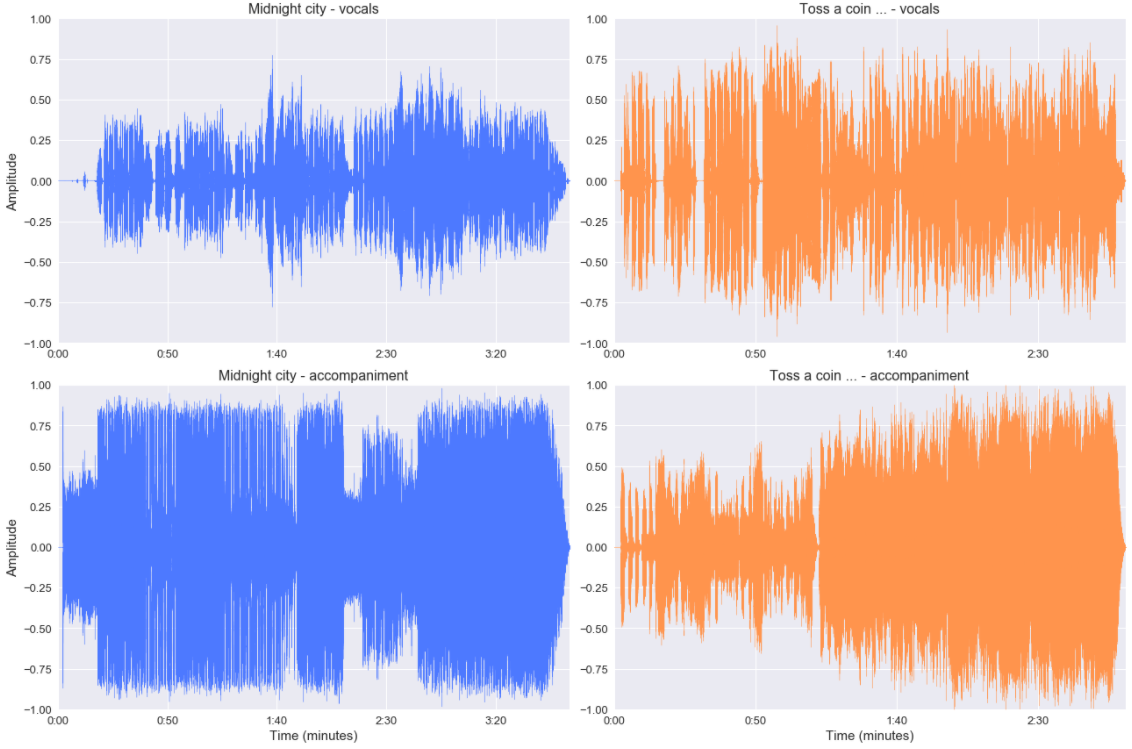

What is the impact from an amplitude point of view?

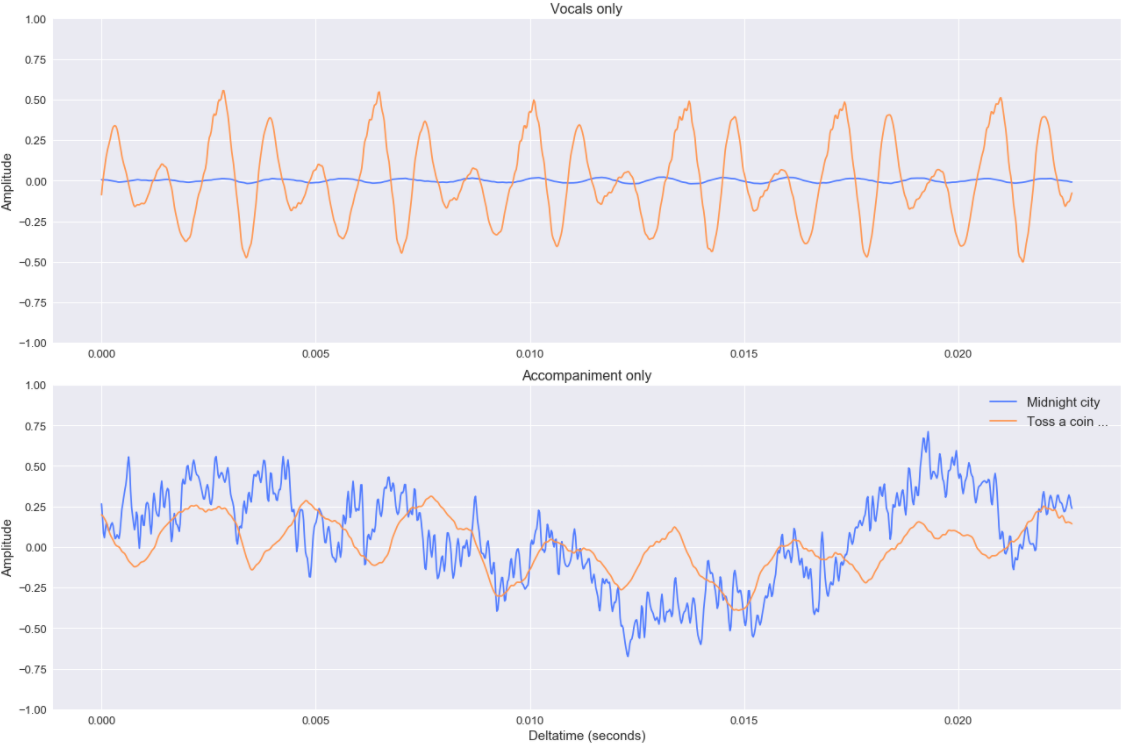

The signals are less complicated from a general point of view but still very noisy (and the zoom still illustrates that).

Still, I think that this kind of separation can be useful in the context of building tracks based on the song’s amplitude.

Let’s now cross the data from the Beat Saber track with the song.

Diving into the Beat Saber side

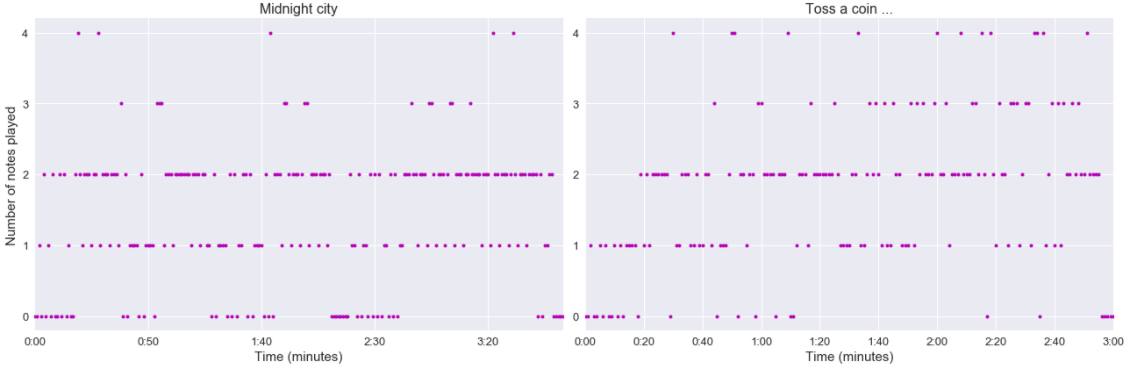

From the files downloaded from bsaber.com, I have the song that I analyzed previously and the levels (with the notes, obstacles, etc.). For this article, I am only going to analyze the Normal track and the number of notes displayed per second on this track (not the type of notes).
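Counting the notes per second could look like the sketch below. It assumes that the difficulty file is JSON with a "_notes" list whose "_time" values are expressed in beats, and that the tempo (BPM) is read from the song's info file; both points are assumptions about the map format rather than something shown in this article, and the function name `notes_per_second` is hypothetical. Tempo changes inside a song are ignored.

```python
import json
from collections import Counter


def notes_per_second(level, bpm):
    """Count the notes falling in each one-second bucket of the song.

    Assumes `level` is the parsed JSON of a difficulty file with a "_notes" list
    whose entries carry a "_time" field expressed in beats (assumed map format).
    """
    counts = Counter()
    for note in level["_notes"]:
        seconds = note["_time"] * 60.0 / bpm   # beats -> seconds
        counts[int(seconds)] += 1              # bucket by whole second
    if not counts:
        return []
    # Fill the seconds without any note with 0 so that the list is contiguous
    return [counts.get(s, 0) for s in range(max(counts) + 1)]


# Tiny hand-written level standing in for a real file
level = json.loads('{"_notes": [{"_time": 0.5}, {"_time": 1.0}, {"_time": 2.0}, {"_time": 8.0}]}')
per_second = notes_per_second(level, bpm=120)
```

At 120 BPM one beat lasts half a second, so the four notes above land at 0.25 s, 0.5 s, 1 s and 4 s.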

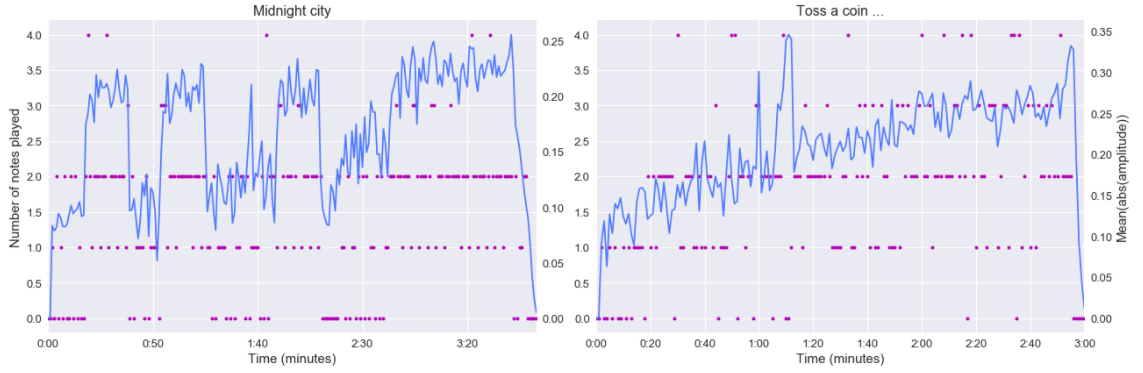

As we can see, there are multiple phases in the notes displayed per second in the songs, but let’s add the amplitude of the songs to this figure. By the way, to compare the amplitude at a one-second scale, I needed to aggregate it per second (taking the average of the absolute value of the amplitude).
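The per-second aggregation of the amplitude can be sketched with NumPy as follows. The function name `mean_abs_per_second` is hypothetical, and dropping the incomplete last second is a simplification I am assuming here; the toy array uses a sampling rate of 4 only to keep the numbers readable.

```python
import numpy as np


def mean_abs_per_second(amplitude, sr):
    """Average of |amplitude| over each full second of audio."""
    n_seconds = len(amplitude) // sr                  # the incomplete last second is dropped
    trimmed = np.abs(amplitude[: n_seconds * sr])     # absolute value of the kept samples
    return trimmed.reshape(n_seconds, sr).mean(axis=1)


# Toy signal: 2 full seconds at 4 samples per second, plus one extra sample that gets dropped
sr = 4
amplitude = np.array([1, -1, 1, -1, 0.5, -0.5, 0.5, -0.5, 9.0])
per_second_amp = mean_abs_per_second(amplitude, sr)
```

Taking the absolute value first matters: the raw samples oscillate around zero, so a plain average would stay close to 0 whatever the loudness.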

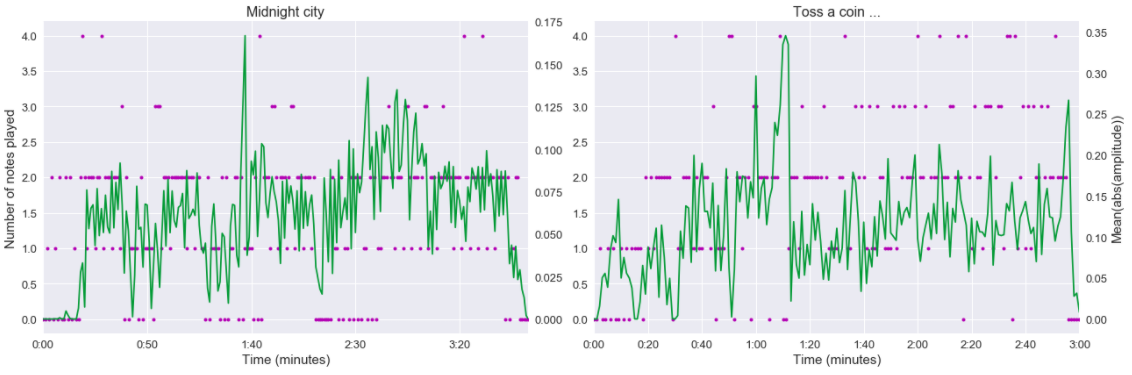

The number of notes played seems to be highly dependent on the amplitude of the signal. In Midnight City, we can distinguish the calm periods, with 0 notes played, from the more intense periods with a higher amplitude. For The Witcher, the analysis is less clear, but we can still see some phases in the appearance of the notes. Let’s now focus on the vocals.

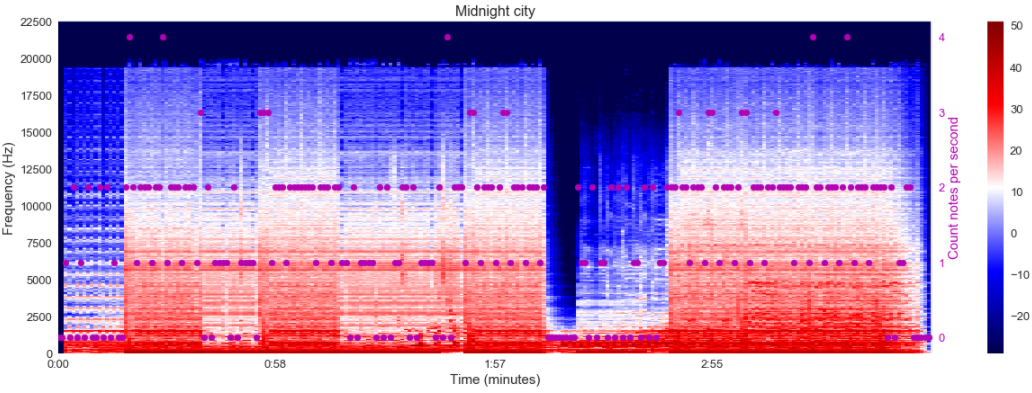

From this analysis, the track on Midnight City doesn’t look affected by the lyrics, while for The Witcher there is a notable impact of the vocals around the one-minute mark. To finish, I am going to cross the spectrogram with the notes played (again, I needed to aggregate per second, and in this case I decided to take the max).
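One possible reading of this aggregation is to keep, for each frequency bin, the maximum value over all the spectrogram frames falling in the same second. The sketch below does that with NumPy; the function name `max_per_second` is hypothetical, and the default `hop_length=512` is assumed to match the hop used when the spectrogram was computed (it is the default of `librosa.stft`).

```python
import numpy as np


def max_per_second(Xdb, sr, hop_length=512):
    """Collapse a (n_freq, n_frames) spectrogram to (n_freq, n_seconds) with a per-second max.

    Assumes hop_length / sr < 1 so that every second contains at least one frame.
    """
    frame_times = np.arange(Xdb.shape[1]) * hop_length / sr   # time of each frame in seconds
    seconds = frame_times.astype(int)                         # second each frame belongs to
    n_seconds = int(seconds.max()) + 1
    out = np.empty((Xdb.shape[0], n_seconds))
    for s in range(n_seconds):
        out[:, s] = Xdb[:, seconds == s].max(axis=1)          # max over the frames of second s
    return out


# Toy spectrogram: 2 frequency bins, 5 frames, with 2 frames per second
Xdb = np.array([[1.0, 5.0, 2.0, 0.0, 7.0],
                [3.0, 1.0, 4.0, 8.0, 6.0]])
collapsed = max_per_second(Xdb, sr=4, hop_length=2)
```

The result has one column per second, which makes it directly comparable with the number of notes per second.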

In the spectrogram of Midnight City, an interesting pattern seems to appear: the parts of the song with more high-frequency content seem to have fewer 0-note moments than the parts with lower frequencies.

For The Witcher, the spectrogram is perhaps less expressive.

Overall, from my point of view, the analysis of the spectrogram can give interesting insights that could be useful for building a model that generates tracks from a song.

Conclusion

This article was a brief introduction to music analysis; there is a whole field to mine, and trying to build systems like recommender engines or track builders around it is going to be fun.

For my next steps on these topics:

- Build a more robust scraper to collect cleaner data around user ratings

- Make a global analysis of all the songs (based on all the new data collected)

- Analyze in depth the work done by OXAI around track building (their system builds on something initially based on Dance Dance Revolution)

- Dive into the music information retrieval conferences to learn more about this topic

- Build some recommender engines…